Apple’s move to SSDs across the iMac lineup instead of hard drives offers considerable speed benefits to users. AppleInsider explains what the storage terms mean for Mac users, and how they apply to your computing needs.

Storage on computing devices, including desktops, notebooks, and mobile devices, is a precious commodity that could easily be filled up by some users. The need to keep entire digital lives on a single piece of hardware can tax many configurations, and prompt the need for either an upgrade in storage or a replacement of the device itself.

For Mac users, this filling up of capacity can lead to an exploration of drive upgrade options that they may have for their Mac. However, for the uninitiated, the various choices available on the market, as well as the less-than-clear terminology, could make it intimidating to look into with any real depth.

Furthermore, not every drive option available to purchase will necessarily be the best option for the consumer.

With the removal of the option to include a hard drive in the 21.5-inch iMac in favor of Fusion Drive or SSDs, there’s now more of a need for beginner Mac users to understand how storage works.

Here’s AppleInsider’s guide to the basic differences between storage components, as well as some of the more confusing technology elements that you may need to know before plumbing the depths of drive options.

Hard drives: cheap but slow

Hard drives have been around for decades. When introduced, they were an order of magnitude faster than the prevalent removable storage of the day: floppy drives.

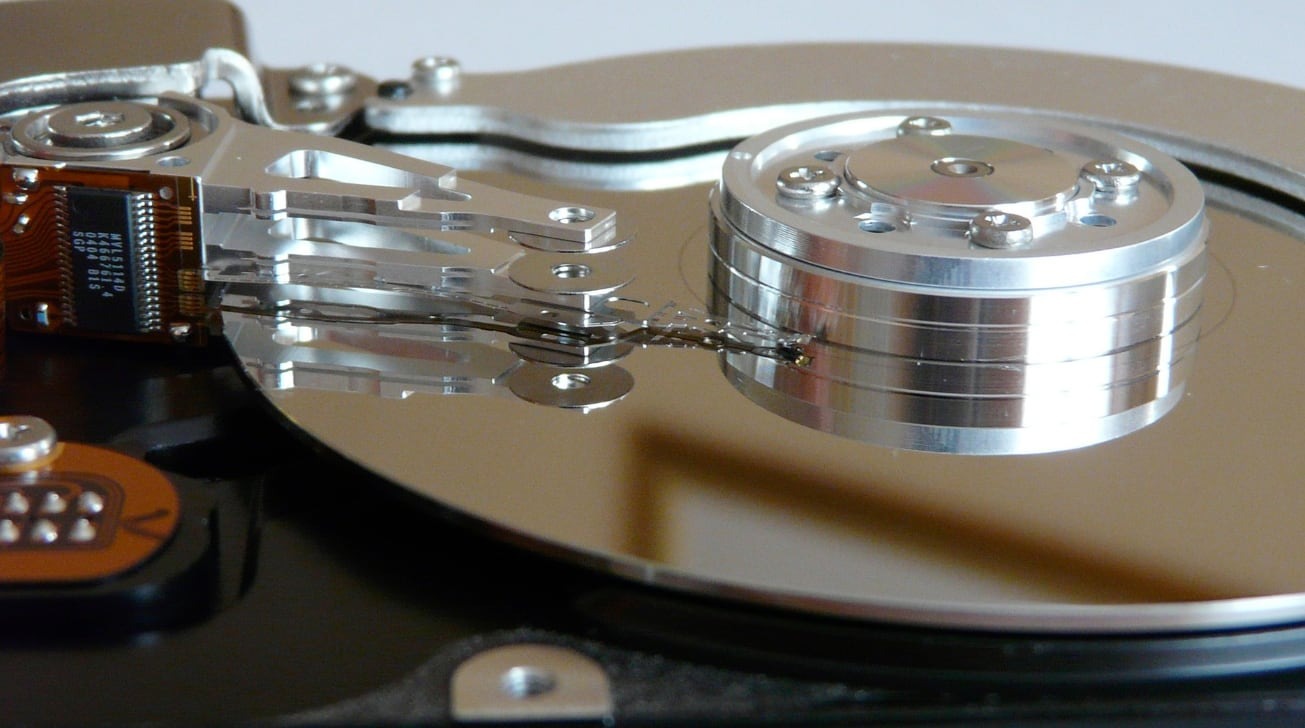

A hard drive consists of layers of platters with a magnetic coating being spun around at high speed, while a read head passes over the layers to both read and write data onto the platters. This is somewhat similar in concept to a record player, except with multiple platters, higher spin speeds, and the ability to write to the platters.

A hard drive platter with a read head floating above.

Due to their mechanical nature, hard drives have their limitations. For a start, the need to spin the platters and move the head to the right location means there will be a lag in speed, which can be further compounded by a drive where the files are fragmented in random locations instead of in sequential order, causing the drive to keep searching for the next part of data it has to read.

The slowness is also impacted by the actual spinning speed of the platters themselves, with typical speeds for drives being either 5,400RPM or 7,200RPM, though some are capable of 10,000RPM or higher. These higher rotation speeds can result in faster data reading, but they are also commonly more expensive.

Old 2.5-inch hard drives including some used in Apple hardware.
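To put the spin speeds mentioned above in perspective, here is a rough sketch of average rotational latency. It assumes the common rule of thumb that the wanted data is, on average, half a revolution away from the read head, and it ignores the time taken to move the head itself. The function name is purely illustrative.

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """Average wait for the right spot on the platter: half of one revolution."""
    seconds_per_revolution = 60.0 / rpm
    return 0.5 * seconds_per_revolution * 1000.0


# Spin speeds quoted in the article.
for rpm in (5400, 7200, 10000):
    print(f"{rpm} RPM: about {avg_rotational_latency_ms(rpm):.2f} ms per access")
```

Under this simple model, a faster spindle shaves only a few milliseconds off each access, which helps explain why even high-RPM drives cannot match storage with no moving parts.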

A motor spinning disks at such speeds can also make the drives noisy, with people able to hear the drive spinning away and the read and write heads moving, while under load. The spinning also generates heat, which can contribute to overall computer temperatures, which in turn can cause issues elsewhere.

Spinning drives are also susceptible to problems involving motion, with users generally advised not to move notebooks containing hard drives while they are operational. Jolts and drops can also cause other problems, such as making a read head briefly touch the surface of the disk, which can destroy data under some circumstances.

So if they’re slower, noisy, and potentially more fragile, why keep them around? Price and capacity.

On a per-gigabyte level, it is hard to beat the hard drive, with modern versions capable of holding multiple terabytes of data at a relatively reasonable cost. This comes from the decades of development of the technology, which is still seeing improvements to how much they can handle.

They are also ideally suited for situations where capacity is prized over the speed of access. For example, a file server on a network or a network-attached storage device may want to be loaded up with high-capacity drives for backing up files. As they use the local network for data transfers, access speeds aren’t essential, so newer and more advanced technologies that have faster access times lose their advantage.

Until other storage technologies can offer comparable capacities without a massive expense, hard drives will probably stay around for quite some time.

Solid state drives: fast but expensive

The newer storage type, solid-state drives, or SSDs, are non-mechanical drives that rely on flash memory chips to store data. An embedded processor manages the data stored on the flash memory chips, allocating where new data is written and handling the retrieval of data.

Since this is a completely electronic process, this means there are no mechanical systems at play that could slow down the reading or writing of data to the drive itself. The latency of the drive starting to read or write data is minimal, and when combined with the much faster reading and writing times, this gives SSDs a considerable speed advantage over hard drives.

The introduction of SSDs as the boot drives of computers enabled boot times for Macs and PCs to drop from around a minute to a matter of seconds, as well as dramatically cutting down waiting times for accessing files stored on the drives.

An SSD can be a welcome upgrade from an older Mac’s mechanical drive.

The lack of mechanical elements means an SSD is also practically silent when in use, as well as generating less heat.

Since an SSD consists of chips soldered to a board, it is more durable, making it more suitable for notebooks and other applications where movement is anticipated.

The only real downsides of an SSD are cost and capacity, which is in part due to it being a relatively newer technology compared to the much older and more mature hard drive.

For comparison, a 1TB SSD could be acquired for around $100 at the time of writing. A 1TB hard drive costs as little as $40, or for roughly the same price as the SSD, a person could instead acquire a 3-terabyte hard drive.

The difference is more evident at higher capacities of storage. At 8TB, an SSD could easily cost $1,000 or more, depending on model and retailer, while a hard disk of the equivalent capacity could be acquired at around the $200 level.
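The price gap is easier to compare as a cost per gigabyte. The sketch below uses only the prices quoted above, and assumes decimal terabytes (1TB = 1,000GB), as drive makers advertise. The function names are illustrative.

```python
# Prices quoted in the article (US dollars), keyed by drive type and capacity in TB.
PRICES = {
    ("SSD", 1): 100,
    ("HDD", 1): 40,
    ("SSD", 8): 1000,
    ("HDD", 8): 200,
}
GB_PER_TB = 1000  # assumption: decimal terabytes


def cost_per_gb(price_usd: float, capacity_tb: float) -> float:
    """Dollars paid for each gigabyte of capacity."""
    return price_usd / (capacity_tb * GB_PER_TB)


def ssd_premium(capacity_tb: int) -> float:
    """How many times more an SSD costs per gigabyte than a hard drive."""
    ssd = cost_per_gb(PRICES[("SSD", capacity_tb)], capacity_tb)
    hdd = cost_per_gb(PRICES[("HDD", capacity_tb)], capacity_tb)
    return ssd / hdd


for (kind, tb), price in PRICES.items():
    print(f"{tb}TB {kind}: ${cost_per_gb(price, tb):.3f} per GB")
```

With these figures, the SSD costs about 2.5 times as much per gigabyte at 1TB, and about 5 times as much at 8TB.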

In PC circles, this has led to enthusiasts acquiring both SSDs and hard drives, with the former used for the boot drive and the latter for storing less accessed or large files. In 2020, the cost-per-gigabyte for SSDs is now starting to reach the point where enthusiasts are moving to all-SSD systems, as the price premium for higher capacities can be more reasonable.

Fusion Drive and hybrid drives

In a bid to satisfy the consumer’s need for speed without sacrificing storage, hard drive producers came up with hybrid drives. In short, hybrid drives are hard drives that have a few gigabytes of flash memory added on, and a system that diverts data between the two locations, while still appearing to the computer as if it is one logical drive.

The flash memory is used to cache frequently-accessed files, such as system files, as detected by its onboard system. Cached files are considerably quicker to retrieve from this storage area, but requests for other files remain slower as they have to be fetched from the mechanical drive.
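The caching behavior can be illustrated with a toy model. This is only a sketch: the class name is hypothetical, and it uses a simple least-recently-used policy as a stand-in, since the actual logic inside any given hybrid drive is not described here and is likely more sophisticated.

```python
from collections import OrderedDict


class ToyHybridDrive:
    """Toy model: a small flash cache sitting in front of a slow mechanical disk."""

    def __init__(self, cache_blocks: int):
        self.cache_blocks = cache_blocks
        self.flash = OrderedDict()  # block id -> True, ordered oldest to newest use
        self.flash_hits = 0
        self.disk_reads = 0

    def read(self, block: int) -> str:
        """Return which part of the drive served the read."""
        if block in self.flash:
            self.flash.move_to_end(block)  # mark as most recently used
            self.flash_hits += 1
            return "flash"
        # Cache miss: fetch from the platters, then keep a copy in flash.
        self.disk_reads += 1
        self.flash[block] = True
        if len(self.flash) > self.cache_blocks:
            self.flash.popitem(last=False)  # evict the least recently used block
        return "disk"


drive = ToyHybridDrive(cache_blocks=2)
pattern = [1, 2, 1, 1, 3, 1, 2]  # block 1 stands in for a frequently used system file
sources = [drive.read(block) for block in pattern]
print(sources)
print("flash hits:", drive.flash_hits, "disk reads:", drive.disk_reads)
```

In this run the often-requested block is served from flash after its first read, while the blocks requested only occasionally keep falling back to the disk.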

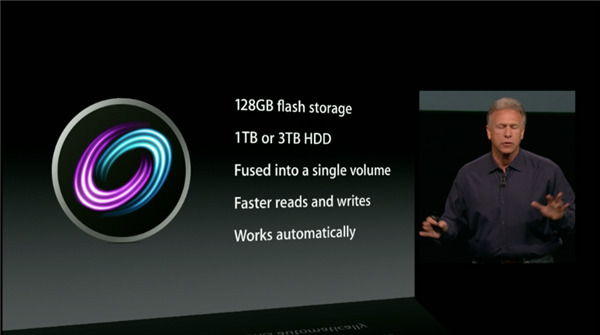

How Apple introduced Fusion Drive to the world.

Apple’s solution, Fusion Drive, fits within the banner of hybrid drives, combining an SSD with a much larger hard drive. This offers the same effect as a single hybrid drive, including faster booting times and speedier file access, but it reverts to the slower speeds for other non-cached files.

Apple does offer the Fusion Drive as an option on some Mac configurations, including the updated 21.5-inch iMac, with its cost compared to SSD upgrades making it quite attractive to users who want big storage without forking out the cash for the larger SSDs.

Drive connections: SATA and M.2

Attaching the drives to the rest of the computer can be performed in a variety of ways, but most people will come into contact with just a few: SATA and M.2.

The replacement for the old IDE cables of yesteryear, Serial ATA or SATA is the interface that allows a drive to connect to a motherboard or logic board, as well as to receive power. Instead of having a wide IDE cable that connects to two drives, SATA uses one thin cable per drive for data, while the old Molex power connector is likewise replaced by a similarly slim connection.

While IDE cables enabled data transfer speeds of up to 133MB per second, SATA brought in even faster transfers of up to 6Gb (gigabits) per second, which works out to a sustained throughput of up to 600MB per second.
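The gap between the headline SATA figure and 600MB per second comes from two conversions: the headline rate is measured in bits rather than bytes, and SATA uses 8b/10b encoding, where every 10 bits sent over the cable carry 8 bits of actual data. A short sketch of the arithmetic, with illustrative names:

```python
LINE_RATE_BITS_PER_S = 6_000_000_000  # SATA signalling rate: 6 gigabits per second
ENCODING_EFFICIENCY = 8 / 10          # 8b/10b: 10 bits on the wire carry 8 data bits
BITS_PER_BYTE = 8


def usable_megabytes_per_second(line_rate_bits: float, efficiency: float) -> float:
    """Convert a raw line rate in bits per second into usable megabytes per second."""
    data_bits_per_s = line_rate_bits * efficiency
    return data_bits_per_s / BITS_PER_BYTE / 1_000_000


mb_per_s = usable_megabytes_per_second(LINE_RATE_BITS_PER_S, ENCODING_EFFICIENCY)
print(f"Usable SATA throughput: about {mb_per_s:.0f}MB per second")
```

Real drives land a little below this ceiling because of protocol overhead, which this sketch does not model.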

The newer M.2 is another style of interface for drives, but one that involves directly mounting the drive onto the motherboard via a dedicated M.2 slot. This eliminates the need for cables to power or transfer data, making things neater and more compact compared to those that require cables.

Due to directly plugging in, M.2-based drives are usually made to be as small or reasonably thin as possible, and usually take the form of flash memory chips on a slimline board. While it would be technically possible for a mechanical drive to use M.2, the nature of mounting to the motherboard and the need to save space reserves the interface for SSDs.

While having a different name, M.2 SSDs tend to use SATA 6Gb/s for connectivity, meaning there isn’t any real improvement in transfer speeds, but the space savings are still useful for computer hardware that lacks room to spare, such as notebooks.

An example of an NVMe drive that connects via M.2.

As an aside, there are also M.2 NVMe (Non-Volatile Memory Express) drives, which take advantage of the M.2 form factor, but work slightly differently. By connecting using the PCI-Express interface, the connection allows for even faster transfers.

For example, while a SATA-based M.2 SSD may offer reading and writing speeds in the region of 500 to 550MB per second, the M.2 NVMe equivalent can function at a much quicker 3.5GB per second.
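As a rough illustration of what that difference means in practice, the sketch below estimates how long a large file would take to read at each speed. The 50GB file size is a hypothetical example, and the calculation assumes the drive sustains its peak speed throughout, which real drives often do not.

```python
def transfer_seconds(file_gb: float, speed_mb_per_s: float) -> float:
    """Time to move a file at a constant speed, using decimal units (1GB = 1,000MB)."""
    return file_gb * 1000 / speed_mb_per_s


FILE_GB = 50  # hypothetical file size, for example a large video project

# Speeds quoted in the article, in MB per second.
for label, speed in (("M.2 SATA SSD", 550), ("M.2 NVMe SSD", 3500)):
    print(f"{label}: about {transfer_seconds(FILE_GB, speed):.0f} seconds")
```

For small everyday files, both drives finish so quickly that the difference is hard to notice, which is why the extra speed matters mostly for heavy workloads.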

While this does make NVMe drives extremely handy for people who want high levels of performance, it does require a PCIe-compatible M.2 slot to be available. In some cases, it is also possible to add an M.2 NVMe drive via a PCIe adapter card, though again this would require the use of a PCIe slot if one is available.

This extra speed also costs more money, typically adding roughly 20% to 25% to the cost of an equivalent SATA-based M.2 SSD. Given the limited use cases for these high read speeds, such as editing 4K video footage, it may be more useful for a person buying storage to opt for the M.2 SSD over the NVMe, and potentially go for a higher capacity drive at the same time.

There is another way that a drive can be connected to a system: soldering it in place.

Over the years, Apple has moved towards soldering flash-based storage directly to the logic board of its Mac products, in order to create ever thinner devices. Older devices like earlier Mac mini models offered the opportunity to upgrade with a bit of effort, but newer models generally don’t have this capability.

For example, the teardown of the 2020 27-inch iMac refresh revealed the mechanical drive option wasn’t available, with the SSD soldered to the logic board. Furthermore, there isn’t any form of expansion connection on the logic board pertaining to storage, effectively eliminating those sorts of upgrade options.

While people may be concerned about whether a drive is a mechanical version or an SSD, or whether it connects over SATA or M.2, there’s still more to consider about a drive, namely how long the chips in an SSD will last.

SLC, TLC, MLC

Like many other elements of a computer, there are a few varieties of components that could be used, but for consumers, there are broadly speaking three acronyms to care about: Single Level Cell (SLC), Multi Level Cell (MLC), and Triple Level Cell (TLC).

The NAND flash memory chips that are used to store data on an SSD are made up of a number of cells, which each can hold bits of data, changeable by electrical signals. Depending on the type of flash, different amounts of bits can be saved per cell.
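Each extra bit stored in a cell doubles the number of distinct charge states the cell has to hold and the drive has to tell apart. A minimal sketch of that relationship, assuming the common convention that MLC means two bits per cell:

```python
# Bits per cell for each flash type. MLC = 2 bits is an assumption based on common usage.
BITS_PER_CELL = {"SLC": 1, "MLC": 2, "TLC": 3}


def charge_levels(bits: int) -> int:
    """Number of distinct states a cell must represent to store the given bits."""
    return 2 ** bits


for name, bits in BITS_PER_CELL.items():
    print(f"{name}: {bits} bit(s) per cell, {charge_levels(bits)} charge levels")
```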

SLC is the most expensive, as only one bit is allocated per cell, meaning more memory chips have to be used than other versions for comparable capacities. Typically reserved for enterprise usage, SLC flash offers the highest number of write cycles, referring to the number of times each cell can be rewritten before it wears out, as well as being generally more reliable in the face of errors or extreme temperatures.

MLC, as the name suggests, holds multiple bits of data per cell, typically two, cutting the production cost significantly. The trade-off is that it is less durable than SLC, with write cycles per cell hovering around 10,000 compared to the 100,000 of SLC.

A variant of MLC also exists called eMLC (Enterprise Multi Level Cell), which tries to bridge the gap between MLC and SLC by effectively being a more durable MLC. For eMLC, the cycle count per cell rises to between 20,000 and 30,000.

The cheapest type of memory to produce, TLC is capable of storing three bits in a cell. The cycle count is the lowest of the bunch at around 5,000 per cell, which sounds low but still equates to several years of usage.
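To see how a cycle count translates into years, here is a back-of-the-envelope estimate. It is a sketch under loud assumptions: the 250GB capacity, 100GB of writes per day, and write amplification factor of 3 are all hypothetical, and the model assumes the drive spreads writes perfectly evenly across its cells. Only the cycle counts come from the figures above, with the lower eMLC figure used.

```python
# Approximate cycles per cell, as quoted in the article.
CYCLES_PER_CELL = {"SLC": 100_000, "eMLC": 20_000, "MLC": 10_000, "TLC": 5_000}


def estimated_years(capacity_gb: float, cycles: int, gb_written_per_day: float,
                    write_amplification: float = 3.0) -> float:
    """Idealized lifespan: total writable data divided by daily flash writes.

    write_amplification is a hypothetical factor for the extra internal writes
    a drive performs for each gigabyte the user actually saves.
    """
    total_writable_gb = capacity_gb * cycles
    flash_gb_per_day = gb_written_per_day * write_amplification
    return total_writable_gb / flash_gb_per_day / 365


# Hypothetical heavy user: 250GB drive, 100GB written every single day.
for name, cycles in CYCLES_PER_CELL.items():
    years = estimated_years(250, cycles, 100)
    print(f"{name}: roughly {years:.0f} years")
```

Even with these demanding assumptions, the TLC estimate comes out at over a decade, though real-world lifespan also depends on factors this model leaves out, such as controller or other component failures.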

If you’re buying an SSD off the shelf, it is more likely that the memory will be either MLC or TLC.

Sequential and Random access speeds

When looking at the specifications of a drive or a review, people may see references to sequential and random access speeds, along with figures relating to reading data from the disk and writing to it. In short, they describe two different ways a drive can access data, each representing a specific usage scenario.

Sequential access refers to blocks of data that are read in order, whereas random access involves pulling data blocks from multiple locations.

For hard drives, sequential access is preferable, as that would mean a series of blocks of data would be read in one go without having to wait for the read head to move to a new position and wait for the platter to spin around to the right start point for the next section. In cases where files are removed and leave gaps that can be filled with smaller files later on, this can lead to a loss of sequential data blocks.

This leads to a phenomenon called fragmentation, where the performance of a hard drive is hampered by the constant need to find where the next block of data is located after one section ends. In effect, the faster sequential access is eroded away, with activity resembling the slower random access.

That is why hard drives needed to be defragmented over time, allowing the system to reorganize blocks of data to ensure sequential access as much as possible. This can take hours, and requires a drive to copy file fragments to unused parts of a hard drive, before copying them back into positions that are more beneficial.

![This is an example of the extra work that may be required of a drive for random access versus sequential. [via Wikipedia]](https://photos5.appleinsider.com/gallery/37082-69459-sequential-v-random-xl.jpg)

This is an example of the extra work that may be required of a drive for random access versus sequential. [via Wikipedia]

Random access tests typically revolve around accessing blocks of data in different orders or positions than would normally be expected for sequential access. A hard drive would have to skip between positions in such cases, which is inefficient for the drive’s operation versus sequential access.

An example of this would be comparing reading data for a large movie file versus loading a game, with the latter using many small files a fraction of the size of the movie file. As these files may not be accessed in the same order as they were written to the disk, the drive has no choice but to skip around to reach all the required data blocks, which amounts to random access.
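The extra work involved can be sketched with a toy model that adds up how far a read head would travel when visiting the same blocks in order versus in a scattered order. This is a simplification with illustrative names: it treats the disk as a single line of numbered positions and ignores platter rotation entirely.

```python
import random


def head_travel(block_order: list) -> int:
    """Total distance the head moves, in block positions, visiting blocks in order."""
    return sum(abs(b - a) for a, b in zip(block_order, block_order[1:]))


BLOCKS = 1000
sequential = list(range(BLOCKS))     # blocks laid out and read in order
scattered = sequential[:]
random.Random(0).shuffle(scattered)  # same blocks, random order (fixed seed)

print("sequential travel:", head_travel(sequential))
print("scattered travel: ", head_travel(scattered))
```

The same amount of data is read in both cases, but the scattered order forces far more head movement, which is the time a mechanical drive spends not reading anything.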

For SSDs, this is less of a problem due to the lack of mechanical components being the main slowing factor. Even so, there is still some variance between drives in terms of sequential and random speeds.

When looking at a drive, take into consideration the types of files that you intend to store on it. Large video files may benefit more from a drive with better sequential access speeds, while documents and tasks relying on numerous small files will generally be better on a drive with higher random access speeds.

Minimal upgrades and external options

For Mac users, the problem of upgrading their device is one where their choices are limited for the most part. The implementation of directly-soldered SSDs in Macs, especially in portable models like the MacBook Pro, rules out any possibility of internal changes.

In cases where there seems to be a chance for users to switch out the drives for something else, Apple has also put measures in place to hamper such attempts.

The introduction of the T2 security chip into Mac models has enabled Apple to increase the security on drives by having it handle encryption. Unfortunately, that very same system also makes it almost impossible to detach removable drives without rendering the stored data unreadable.

In some cases, normal users cannot upgrade even the removable parts. For example, the iMac Pro does have removable SSD modules, but they are flash storage controlled by the T2 directly rather than being more independent, meaning a regular SSD cannot be used instead.

The Mac Pro is an exception, with its swappable SSDs, SATA ports, and space for PCIe cards.

There are some exceptions, such as how the Mac Pro has SATA ports alongside other upgrade opportunities, such as SSD module upgrade kits. However, given the Mac Pro is an expensive computer for use in business rather than by consumers, it is expected that there be some sort of way for Mac Pro owners to upgrade and repair their investments over time.

It is still possible to add more storage if it’s external, such as with a NAS or an external drive attached to a Thunderbolt 3 port, with the latter offering the better data transfer speed. Such upgrades can be done, but not without sacrificing physical space or the appearance of the Mac, or in the case of MacBooks, portability, unless the drive is an SSD designed for mobile use.

Mac users wanting to buy a new model are basically stuck with a limited set of drives that aren’t really upgradable. Whatever storage is configured at the time of purchase is what the customer will have to live with, until they either move to use external storage or buy a new model.

Older models that aren’t stuck with soldered flash memory are more likely to be upgradable internally, but at the cost of having to deal with a generally slower Mac compared to newer versions.

If you need to upgrade your storage, your best bet is to buy an external drive, hook it up to Thunderbolt 3, and grin and bear it.