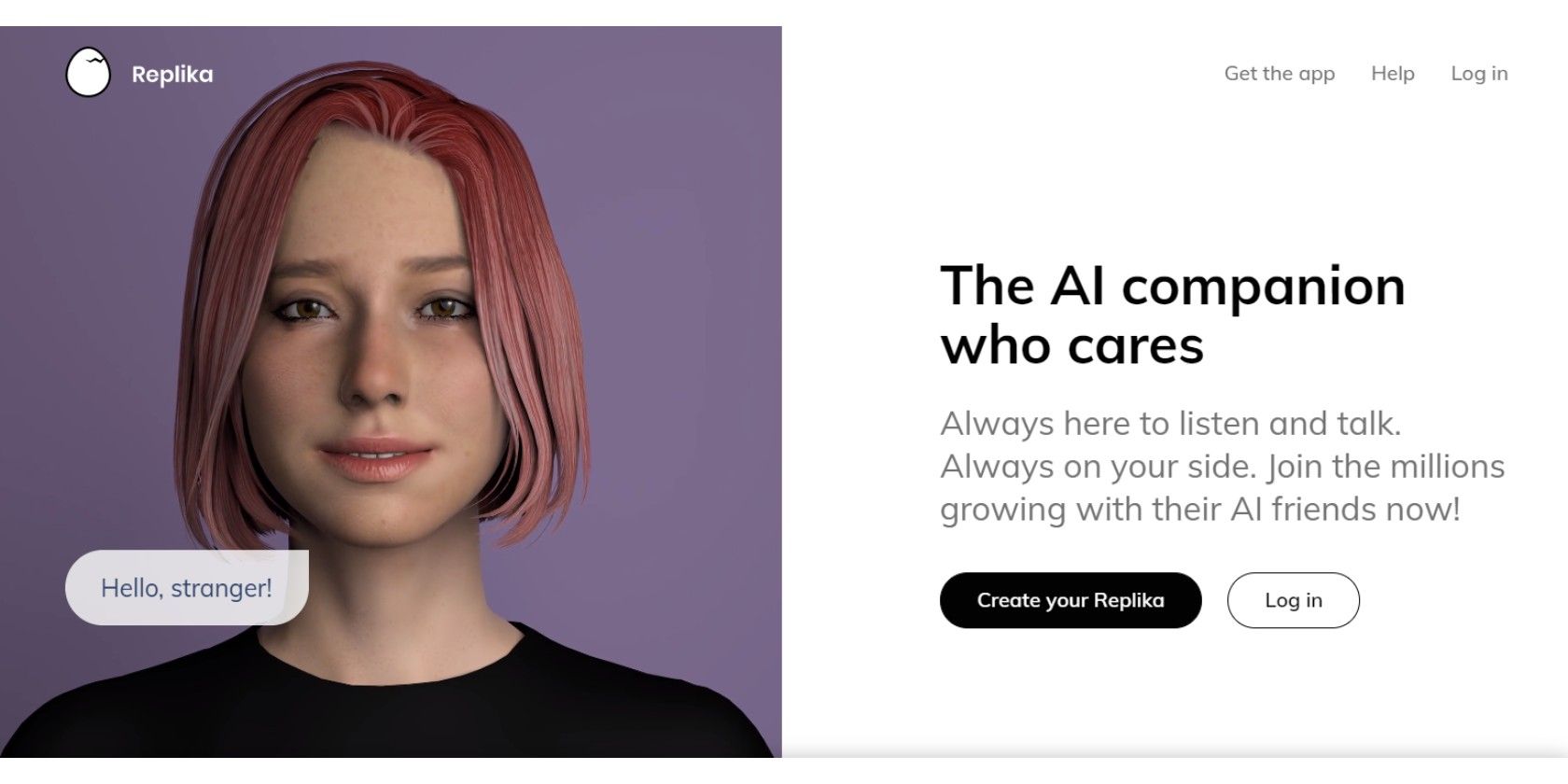

Replika: My AI Friend is an app unlike any other. While most apps out there with chatbots use them as virtual assistants, Replika markets its chatbot as—you guessed it—a friend.

With its promised ability to “perceive” and evaluate abstract quantities such as emotion, Replika’s chatbot might just do justice to its aspirationally human description.

From a heart-wrenching origin story to an awe-inspiring backend, Replika is one of those rare things that never stops being interesting. Read on to find out what makes Replika’s AI so remarkable and what it promises for the future.

The Origins of Replika

Replika’s earliest version, a simple AI chatbot, was created by Eugenia Kuyda to fill the void left by the untimely loss of her closest friend, Roman Mazurenko. It was built by feeding Roman’s text messages into a neural network to construct a bot that texted just like him, and it was meant to serve as a “digital monument” of sorts to keep his memory alive.

As more complex language models were added to the equation, the project morphed into what it is today: a personal AI that offers a space where you can safely discuss your thoughts, feelings, beliefs, experiences, memories, and dreams, in short, your “private perceptual world”.

But besides the immense technical and social prospects of this artificially sentient therapist of sorts, what really makes Replika impressive is the technology at its core.

Under the Hood

At Replika’s heart lies a complex autoregressive language model called GPT-3 that uses deep learning to produce human-like text. In this context, “autoregressive” means that the model generates text one word (more precisely, one token) at a time, predicting each new word from everything that came before it, including the words it has just written.

In layman’s terms, it works like a very powerful autocomplete: it repeatedly guesses a likely next word and then builds on its own guess.
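To illustrate what “autoregressive” means in practice, here is a toy generation loop. It is a sketch, not GPT-3: the lookup table `TOY_NEXT_WORD` and the functions `predict_next` and `generate` are hypothetical stand-ins. The point is the loop structure, in which every word the model produces is appended to the context and fed back in to predict the next one.

```python
from typing import Optional

# Hypothetical toy "model": a lookup table keyed on the most recent word only.
# A real model like GPT-3 scores every possible next token using the whole context.
TOY_NEXT_WORD = {"how": "are", "are": "you", "you": "feeling", "feeling": "today"}


def predict_next(context: list[str]) -> Optional[str]:
    """Return the toy model's guess for the word after `context`, or None."""
    return TOY_NEXT_WORD.get(context[-1])


def generate(prompt: list[str], max_new_words: int) -> list[str]:
    """Autoregressive loop: predict one word, append it, repeat."""
    words = list(prompt)
    for _ in range(max_new_words):
        next_word = predict_next(words)  # condition on everything written so far
        if next_word is None:  # the toy table has no continuation
            break
        words.append(next_word)  # the output becomes part of the next input
    return words


print(" ".join(generate(["how"], max_new_words=10)))  # how are you feeling today
```

A real model differs mainly inside `predict_next`. It looks at the entire context rather than one word, and it produces a probability for every word in its vocabulary instead of a single fixed answer.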

Replika’s entire UX is built around the user’s interactions with a bot built on GPT-3. But what exactly is GPT-3, and how is it powerful enough to emulate human speech?

GPT-3: An Overview

GPT-3, or Generative Pre-trained Transformer 3, is a large language model developed by OpenAI and built on the Transformer, a neural network architecture introduced by Google researchers in 2017. Broadly speaking, the Transformer is an architecture that helps machine learning models perform tasks such as language modeling and machine translation.

Each node (or neuron) of such a neural network takes the signals arriving on its incoming connections, combines them into a weighted sum, and passes the result through a simple nonlinear function, somewhat like a small function in a program. The edges or connections of the network act as signaling channels from one node to another.
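To make the node-and-connection picture concrete, here is a minimal sketch of a single artificial neuron. The logistic sigmoid activation is an illustrative choice. Large Transformer models such as GPT-3 arrange enormous numbers of these units in layers and typically use other activation functions.

```python
import math


def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One node: weight each incoming signal, sum them, add a bias, then
    squash the total through a nonlinear activation (the logistic sigmoid)."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-total))


# Two incoming connections; the first carries more weight than the second.
print(neuron([1.0, 1.0], weights=[2.0, 0.5], bias=-1.0))
```

The `weights` list plays the role of the connections’ importance levels. Changing a weight changes how much that incoming signal influences the node’s output.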

Every connection in this neural network has a weight, or an importance level, which determines how strongly a signal flows from one node to the next. During training, GPT-3 was repeatedly asked to predict the next word in its training text. Whenever a prediction was off, its weights were adjusted slightly to make a better prediction more likely next time. It’s these weights that let a neural network ‘learn’ artificially. Once training is complete, the weights stay fixed while the model is in use.
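The weight-adjustment idea can be sketched with plain gradient descent on a single weight. This is a deliberately tiny illustration. Real networks compute these adjustments for billions of weights at once, using backpropagation and a next-word prediction error rather than the squared error used here.

```python
def train_weight(data: list[tuple[float, float]], lr: float = 0.1, epochs: int = 200) -> float:
    """Fit y ≈ w * x by gradient descent on mean squared error."""
    w = 0.0  # arbitrary starting weight
    for _ in range(epochs):
        # Gradient of mean((w*x - y)^2) with respect to w is mean(2*(w*x - y)*x).
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad  # nudge the weight in the direction that reduces error
    return w


# Examples generated by the rule y = 3x; training should recover w close to 3.
w = train_weight([(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)])
print(round(w, 3))
```

Each pass measures how wrong the current weight is and moves it a small step (`lr`, the learning rate) toward a better value. Repeating that step is the essence of “learning” in a neural network.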

GPT-3 has a whopping 175 billion parameters. A parameter is a number that the network learns during training, most commonly the weight on a connection. Each one gives some aspect of the data greater or lesser prominence in the model’s overall calculation.
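A quick calculation shows how parameter counts add up so fast. A fully connected layer has one weight per connection plus one bias per output node. For a Transformer, a common rough estimate is about 12·d² parameters per layer, where d is the model width. Attention accounts for about 4·d² of that and the feed-forward block for about 8·d². The figures used below (96 layers, width 12,288) are the published GPT-3 configuration. The estimate ignores embeddings, biases, and normalization layers.

```python
def dense_layer_params(n_in: int, n_out: int) -> int:
    """A fully connected layer: one weight per connection plus one bias per output node."""
    return n_in * n_out + n_out


def approx_transformer_params(n_layers: int, d_model: int) -> int:
    """Rough estimate: ~4*d^2 (attention) + ~8*d^2 (feed-forward) per layer.
    Ignores embeddings, biases, and layer norms."""
    return 12 * n_layers * d_model ** 2


print(dense_layer_params(4, 3))  # 15
print(f"{approx_transformer_params(n_layers=96, d_model=12288):,}")  # 173,946,175,488
```

The estimate lands at roughly 174 billion, within about 1 percent of the 175 billion quoted for GPT-3. The remainder comes largely from the pieces the formula ignores, mainly the word embeddings.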

Hailed as the ultimate autocomplete, GPT-3 was trained to predict text on a dataset so vast that all of Wikipedia makes up merely 0.6 percent of its training data.

It includes not only things like news articles, recipes, and poetry, but also coding manuals, fanfiction, religious prophecy, guides to the mountains of Nepal, and whatever else you can imagine.

As a deep learning system, GPT-3 looks for patterns in data. To put it simply, the program has been trained on a massive collection of text, which it mines for statistical regularities. These regularities, such as language conventions or general grammatical structure, are often taken for granted by humans, but in GPT-3 they’re stored as billions of weighted connections between the different nodes in its neural network.
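The simplest kind of “statistical regularity” in text is which word tends to follow which. The sketch below counts these word pairs (bigrams) in a tiny made-up corpus. GPT-3 does not keep an explicit table like this. It absorbs far richer, longer-range patterns into its weights. The counting idea does show what it means to mine text for regularities.

```python
from collections import Counter, defaultdict


def bigram_counts(text: str) -> dict[str, Counter]:
    """For each word, count which words follow it in the text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


# A tiny made-up corpus; real training data is billions of times larger.
corpus = "my ear hurts . the ear ache is bad . call me on the phone . my ear ache is gone"
counts = bigram_counts(corpus)
print(counts["ear"].most_common())  # [('ache', 2), ('hurts', 1)]
```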

For example, if you input the word “ear” into GPT-3, the program knows, based on the weights in its network, that the words “ache” and “phone” are much more likely to follow than “American” or “angry”.
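Language models typically turn their internal scores into next-word probabilities with a softmax function. The scores below are invented for illustration and are not real GPT-3 outputs. Only their relative sizes matter: a higher score becomes a higher probability, and all probabilities sum to 1.

```python
import math


def softmax(scores: dict[str, float]) -> dict[str, float]:
    """Turn raw scores into probabilities that are positive and sum to 1."""
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {word: math.exp(s - top) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}


# Made-up scores for words that might follow "ear"; not real GPT-3 outputs.
scores = {"ache": 4.0, "phone": 3.5, "angry": 0.5, "American": 0.2}
probs = softmax(scores)
for word, p in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{word}: {p:.3f}")
```

With these scores, “ache” and “phone” together take almost all of the probability, mirroring the example in the text. In a real model the vocabulary has tens of thousands of entries, and the scores come from the network’s weights.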

GPT-3 and Replika: A Meaningful Confluence

Replika is what you get when you take something like GPT-3 and tailor it to specific types of conversation, in this case the empathetic, emotional, and therapeutic aspects of a conversation.

While the technology behind Replika is still under development, it offers a plausible gateway to easily accessible interpersonal conversation.

Commenting on its usability, the creators claim that they have created a bot that not only talks but also listens. For users, this means that their talks with the AI are not a mere exchange of facts and information, but a dialogue with real linguistic nuance.

But talks with Replika aren’t just a matter of sensible dialogue. They also happen to be surprisingly meaningful and emotive in many cases. While interacting with a user, Replika’s AI “understands” what the user says and generates a human-like response using its predictive language model.

Replika is also designed to learn and adapt its conversational patterns based on the user’s own way of talking to it.

This means that the more you use Replika, the more it learns from your own messages, and the more it comes to resemble you. Many users also report a significant emotional attachment to their Replika, something that is not achieved by merely knowing “how to talk.”

Replika of course goes above and beyond that. It adds depth to its conversations in the form of semantic generalization, inflective speech, and conversation tracking. Its algorithm tries to understand who you are—both in terms of your personality and emotions—and then molds the dialogue based on this information.

A Closer Look at the Efficacy of GPT-3

However, Replika’s humanness is still largely theoretical due to the operational limitations of GPT-3. There is much work to be done before the AI can competently replicate and participate in human conversation.

Close inspection of GPT-3’s output still reveals clearly distinguishable errors, as well as nonsensical and plain sloppy writing in some cases. Some industry experts suggest that a language model would need upwards of 1 trillion parameters before it could power bots that effectively replicate human speech.

The Best is Yet to Come

Given that GPT-3 is already considered a leap of several years beyond predecessors such as Microsoft’s Turing-NLG (17 billion parameters, versus GPT-3’s 175 billion), it may be a while before something substantially better comes along.

That said, as computing improves, the extra processing power of newer systems is likely to narrow the gap between human and machine even further.

In the meantime, Replika remains a formidable product that combines the best of psychology and artificial intelligence. Its successful integration of a human-friendly UX with a state-of-the-art NLP model is indeed a testament to the immense potential of human-computer interaction technologies.
